In our last blog we covered loading local data files into Snowflake via the GUI, in this blog we're going to get a little more advanced and look at how to bring in data from external cloud storage services, of which Snowflake supports three; Amazon S3, Microsoft Azure, and Google Cloud Storage.

However, in order to load in data from these external cloud storage platforms Snowflake requires something called a stage. In "Snowflake speak", a stage is the location of the data files.

To copy files into Snowflake from an external stage, such as Amazon S3, you will need to specify a named stage. This named stage acts as a pointer to the URL of the data files in the external cloud storage, while also allowing you to configure various functionality based settings.

How to Create a Stage

A stage is created using the base command below:

create or alter stage STAGE_NAMEThis command creates a new stage if it doesn't already exist and if it does exist, it alters it.

However, several additional arguments are typically required to set up the stage properly. The exact syntax and format will vary based on what platform you are connecting to but below is an example of how you might structure the command:

create or replace stage STAGE_NAME

url = 'INSERT_URL_HERE'

credentials =

directory = (enable = true)

file_format = FILE_FORMAT Explaining the additional arguments

Essential

Url = ''

- This is an essential argument which must be included when creating your external stage. It specifies the location of the external data for the stage.

File_format =

- This is an essential argument which also must be included when creating your external stage. It specifies the structure of the data files in the external stage and allows Snowflake to properly interpret the data. When specifying you need to either provide an existing file format object or define a new one.

Useful/Helpful

Directory = true

- This argument is set to true to enable a directory table to be created. A directory table acts as a virtual representation of the file system structure of your stage through which you can interact with and query metadata about your files such as file names and sizes.

Credentials =

- This arguments provides authentication details required for Snowflake to access and read from the external cloud storage services. This can be in the form of an access key, secret key, among others.

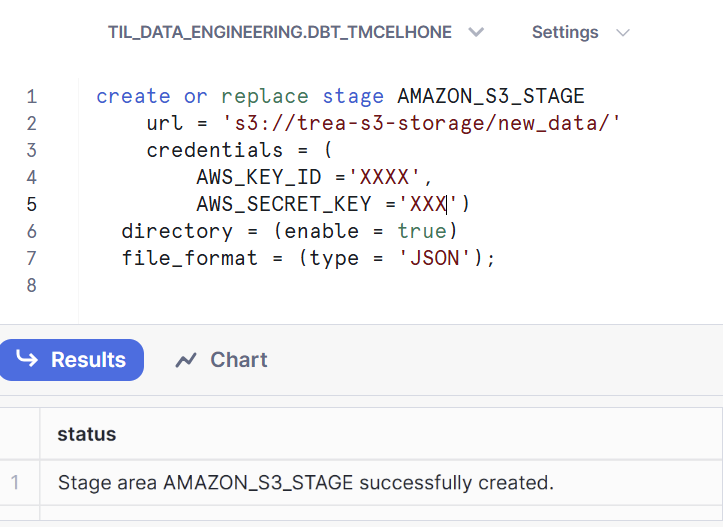

...with Amazon S3

create or replace stage STAGE_NAME

url = 's3//bucket_name/path/'

credentials = ( AWS_KEY_ID = 'XXXX', AWS_SECRET_KEY = 'XXX')

-- OR -- credentials ( AWS_ROLE = 'string' )

directory = (enable = true)

file_format = (type = 'JSON')- URL | The URL follows the format of 's3//your_bucket_name/path', just swapping out the bolded text for the details of your chosen S3 bucket.

- Credentials | For the credentials argument you need to use either IAM user credentials or IAM role credentials - as shown in the example above.

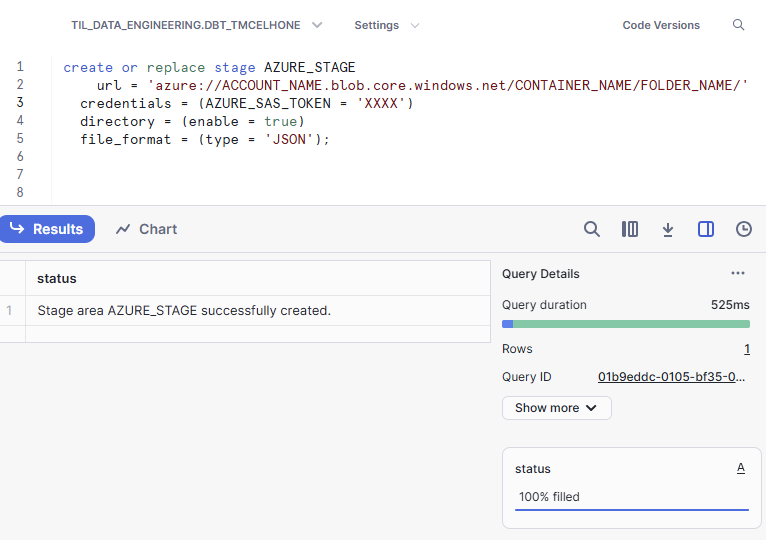

...with Microsoft Azure

create or replace stage STAGE_NAME

url = 'azure://account.blob.core.windows.net/container[/path/]

encryption = ( TYPE = 'AZURE_CSE' MASTER_KEY = 'string' | TYPE = 'NONE' )

directory = (enable = true)

file_format = FILE_FORMAT - URL | The URL follows the format of 'azure://account.blob.core.windows.net/container[/path/], just swapping out the bolded text for the details of your chosen S3 bucket.

- Credentials | I generated an SAS token to allow access to my azure container for this argument.

- Encryption | Specifies the encryption type used. Possible values are;

- AZURE_CSE: Client side encryption and requires a master key value.

- NONE: No encryption

...with Google Cloud Storage

create or replace stage STAGE_NAME

url = 'gcs://load/files/'

directory = (enable = true)

file_format = FILE_FORMAT - URL | The URL follows the format of gcs://<bucket_name>[/path/] - including the path portion if you want to direct to a specific folder.

- Credentials | You will need to provide GCP service account key credentials to allow Snowflake access to your account. For example - CREDENTIALS = (GCP_JSON_KEY = '<YOUR_KEY>')

- Encryption | Specifies the encryption type used. Possible values are;

- GCS_CSE: Client side encryption and requires a master key value.

- NONE: No encryption

Creating external stages in Snowflake is an integral step in setting up data pipelines from external cloud storage platforms like Microsoft Azure and Amazon S3. Defining stages not only specifies where to find your data files but also allows you to configure things like authentication, file format, and extra things like directory tables and encryption - all allowing Snowflake to easily access and pull in your data.

Stay tuned for Part2 where we will learn how to copy the data in using the external stages into our Snowflake tables !