A macro in Alteryx is essentially a reusable workflow. Instead of repeating the same logic multiple times, you wrap it into a macro and call it when needed — just like a custom tool. Macros can help you:

- Simplify repetitive tasks

- Organize complex logic

- Improve performance and scalability

There are three main types of macros:

- Standard Macros – Run once on the entire dataset

- Batch Macros – Run once for each control value, such as each group in the data

- Iterative Macros – Loop until a condition is met
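
As a rough analogy only (Alteryx macros are built on the canvas, not written in code), the three types map onto three familiar control-flow patterns. The Python function names below are hypothetical and exist purely for illustration, and the batch version assumes the data is grouped on the same field as the control values.

```python
def standard_macro(rows, logic):
    """Standard macro: the logic runs once over the whole dataset."""
    return logic(rows)


def batch_macro(rows, control_values, key, logic):
    """Batch macro: the logic runs once per control value."""
    results = []
    for value in control_values:
        batch = [r for r in rows if r[key] == value]
        results.extend(logic(batch))
    return results


def iterative_macro(rows, logic, is_done, max_iterations=100):
    """Iterative macro: the output is fed back in until a condition is met."""
    for _ in range(max_iterations):
        if is_done(rows):
            break
        rows = logic(rows)
    return rows
```

The `max_iterations` guard is a design choice in this sketch so that a condition that never becomes true cannot loop forever.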

In this post, I’ll walk through a simple example where we use a batch macro to group product data by store — and explain why this approach is so useful.

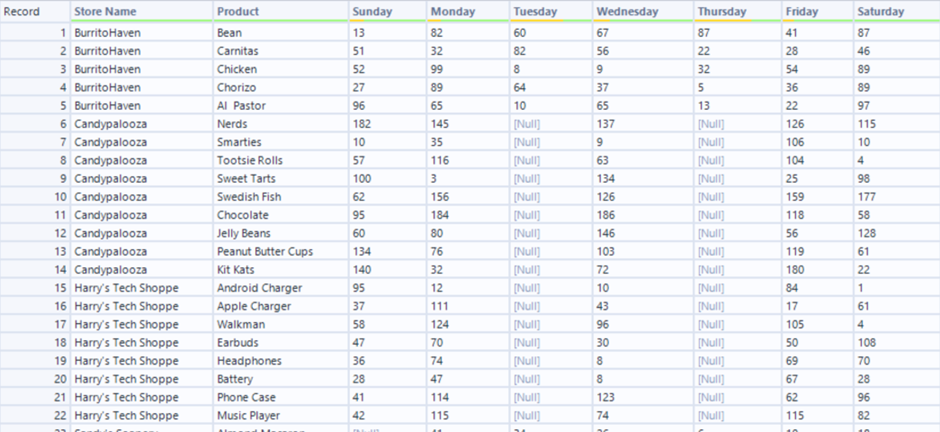

Let’s say we have a dataset with thousands of product records, and each product is tied to a store. We want to generate a separate output for each store that groups and summarizes its products.

Instead of filtering and running the same logic multiple times, we can build a batch macro to:

- Take each store name as a batch

- Apply the same summarization logic

- Return one clean result per store

Building the Macro

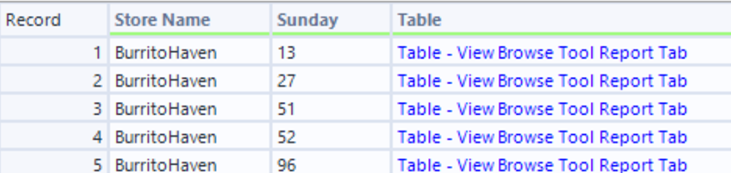

We started by pasting a sample of the product data into a blank workflow. We then right-clicked the resulting input tool and selected "Convert to Macro Input". This replaces the standard input with a Macro Input tool, which is how the macro receives data from the workflow that calls it.

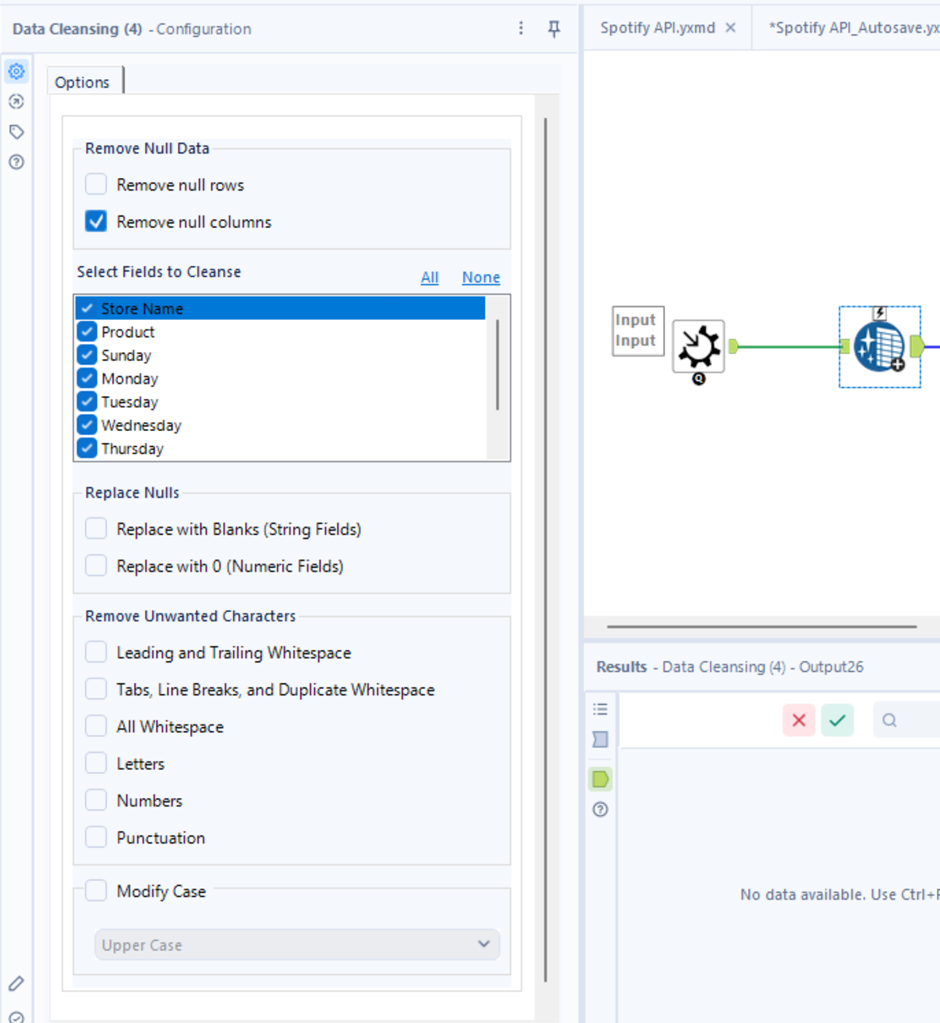

Before working with the dataset, we used the Data Cleansing tool to remove any null columns. This step helps streamline the data and ensures that unnecessary fields don’t cause errors downstream — especially important when looping through multiple batches.
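
For readers who think in code, removing null columns amounts to dropping every field that is empty in all rows. Here is a minimal Python sketch of that idea, assuming rows are dictionaries and treating both `None` and empty strings as null. The function name and sample fields are made up for illustration.

```python
def drop_null_columns(rows):
    """Return the rows without the fields that are null in every record."""
    if not rows:
        return []
    fields = list(rows[0].keys())
    # Keep a field only if at least one row has a real value for it.
    keep = [f for f in fields if any(r.get(f) not in (None, "") for r in rows)]
    return [{f: r.get(f) for f in keep} for r in rows]


sample = [
    {"Store Name": "North", "Product": "Tea", "Notes": None},
    {"Store Name": "South", "Product": "Coffee", "Notes": None},
]
cleaned = drop_null_columns(sample)  # the all-null "Notes" field is dropped
```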

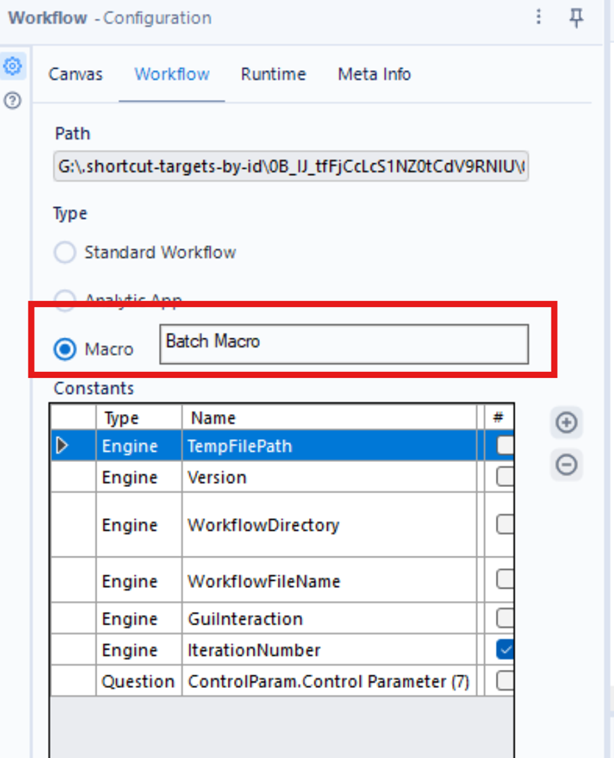

Before continuing, we clicked on a blank space within the workflow and went to the "Workflow" tab in the Configuration pane. From there, we changed the macro type to "Batch Macro".

This tells Alteryx to run the macro once for every unique value passed in through the control parameter, which we will cover later.

If this step is skipped, Alteryx will treat your macro like a standard one and run it only once on the full dataset.

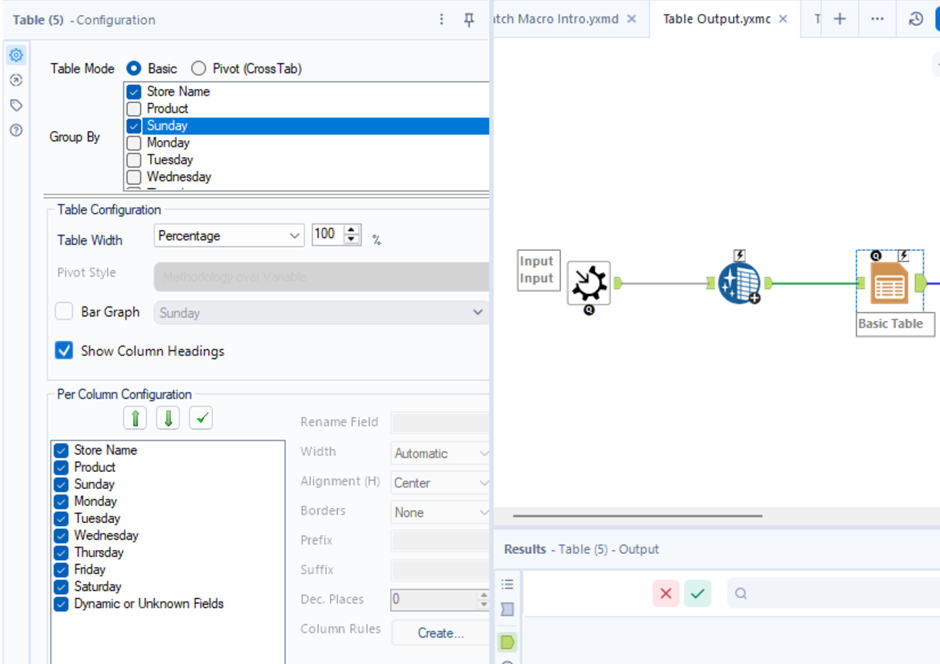

Next, we added the Basic Table tool, which is part of the Reporting toolset in Alteryx. This tool allows you to create simple, formatted tables suitable for exporting, reporting, or embedding into emails and PDFs.

We grouped this table by Store Name only. This ensures that each table we output later will contain data for a single store.

You can think of this step as defining the structure of each batch’s output.
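
To make "one table per store" concrete, here is a small Python sketch that splits records on `Store Name` and renders a plain-text table for each group. It only imitates the idea: the Basic Table tool produces formatted report output, and the function name, column names, and sample values here are invented.

```python
from collections import defaultdict


def tables_by_store(rows, group_field="Store Name"):
    """Build one plain-text table per distinct value of group_field."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_field]].append(row)

    tables = {}
    for store, members in groups.items():
        # The grouping field identifies the table, so it is not a column.
        columns = [c for c in members[0] if c != group_field]
        widths = {c: max(len(c), *(len(str(m[c])) for m in members)) for c in columns}
        header = " | ".join(c.ljust(widths[c]) for c in columns)
        lines = [header, "-" * len(header)]
        for m in members:
            lines.append(" | ".join(str(m[c]).ljust(widths[c]) for c in columns))
        tables[store] = "\n".join(lines)
    return tables


rows = [
    {"Store Name": "North", "Product": "Tea", "Units": 12},
    {"Store Name": "North", "Product": "Coffee", "Units": 7},
    {"Store Name": "South", "Product": "Tea", "Units": 5},
]
tables = tables_by_store(rows)  # one entry for "North", one for "South"
```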

To finish off the macro, we connected a Macro Output tool. This will output one grouped result per store — but only when we link the macro to a real dataset and enable batch logic.

Because our sample input only included one store, we only saw one output at this point. But the real magic happens when we insert the macro into a workflow whose data covers multiple stores.

As mentioned earlier, a batch macro runs once for every unique value passed through a control parameter. The control parameter is where we tell the macro how to batch the data.

A Control Parameter in Alteryx supplies the values the macro loops through. In our case, that is Store Name. When the macro runs, it takes the data for one store at a time, applies the logic inside the macro, and repeats this for each store in the dataset.

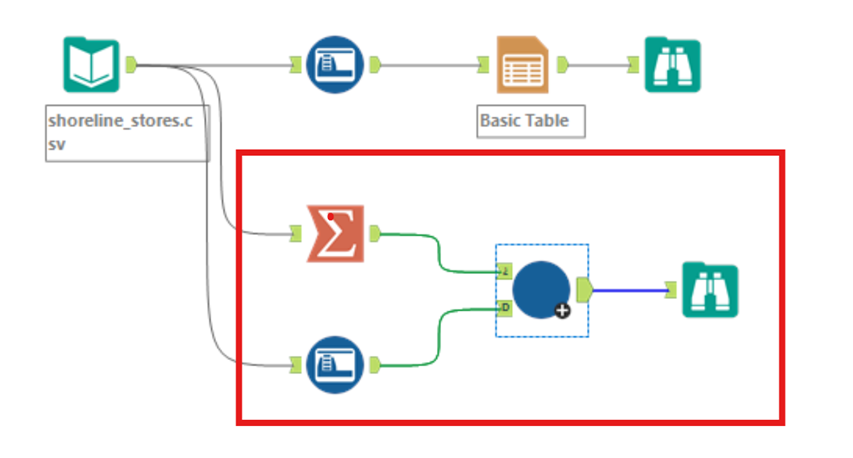

Once the macro was complete, we saved it as a .yxmc file and returned to our original workflow with the full dataset.

Back in the main workflow:

- We used the Summarize tool to group our data by Store Name. This gives us one row per store, which acts as the control values for the macro.

- We used the Auto Field tool to assign each incoming field the smallest type and size that fits its data. This keeps the data compact and helps ensure that mismatched data types don’t break the macro logic.
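
The idea behind automatic typing can be sketched in Python: look at every value in a column and pick the narrowest type that fits all of them. This is a simplified illustration, not Alteryx's actual algorithm. The function name and the three-step ladder (int, then float, then str) are assumptions, and values are assumed to arrive as strings.

```python
def infer_column_type(values):
    """Return the narrowest of int, float or str that fits every value."""
    def fits(cast):
        # A type fits only if every value in the column converts cleanly.
        try:
            for v in values:
                cast(v)
            return True
        except (TypeError, ValueError):
            return False

    for candidate in (int, float):
        if fits(candidate):
            return candidate
    return str
```

For example, a column holding `"12"` and `"7"` would be typed as `int`, while a single `"3.5"` widens it to `float` and any non-numeric text falls back to `str`.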

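The Summarize tool was connected to the Control Parameter input (the upside-down question mark, “¿”) on the macro, and the Auto Field output was connected to the regular data input (labeled “D”). In the macro’s configuration, the Group By tab maps the control field to the matching field in the data, so that each batch receives only its own store’s records.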

When the macro runs, Alteryx:

- Takes one store at a time

- Passes that store’s data into the macro

- Applies the cleansing, grouping, and table logic

- Outputs the result

- Moves to the next store and repeats
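
Put together, the run resembles the following Python sketch, which mirrors the macro's two inputs: a list of control values and the full dataset. It uses a sorted product list as a stand-in for the cleansing and table logic, and all names (`control_values`, `macro_body`, `run_batch_macro`) and sample records are hypothetical.

```python
def control_values(rows, field="Store Name"):
    """Mimic the Summarize step: one control value per distinct store."""
    values = []
    for row in rows:
        if row[field] not in values:
            values.append(row[field])
    return values


def macro_body(store, batch):
    """Stand-in for the macro's logic: list one store's products."""
    return {"Store Name": store, "Products": sorted(r["Product"] for r in batch)}


def run_batch_macro(controls, data, field="Store Name"):
    """Run macro_body once per control value and stack the outputs."""
    outputs = []
    for store in controls:                               # take one store at a time
        batch = [r for r in data if r[field] == store]   # pass in that store's data
        outputs.append(macro_body(store, batch))         # apply the logic, keep the result
    return outputs


data = [
    {"Store Name": "North", "Product": "Tea"},
    {"Store Name": "South", "Product": "Tea"},
    {"Store Name": "North", "Product": "Coffee"},
]
results = run_batch_macro(control_values(data), data)
```

Keeping the control values separate from the data is deliberate here: it reflects that the list of stores to loop over and the records to process arrive through different inputs.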

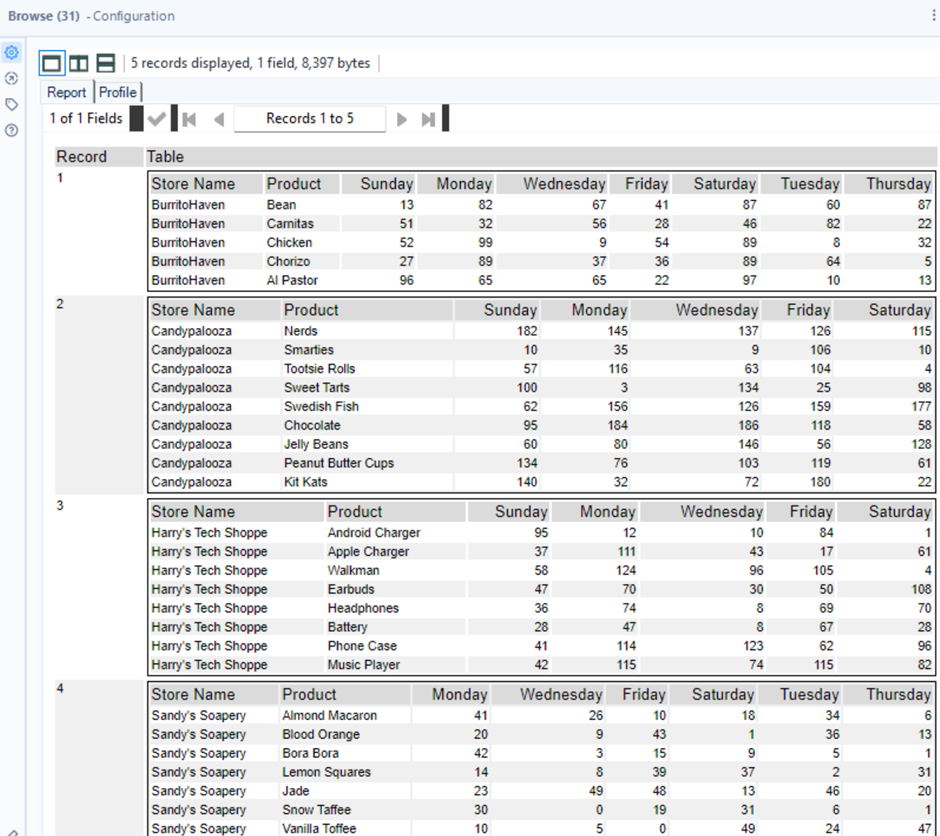

The result is a fully automated setup that:

- Processes data per store

- Applies consistent logic

- Outputs clean, formatted tables for each store individually

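This is quicker to maintain, cleaner, and more scalable than creating manual filters or duplicating workflows: when a new store appears in the data, it is processed automatically with no changes to the workflow.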